Bucks for Buckets (B4B): Active Defenses Against Stealing Encoders

1 CISPA Helmholtz Center for Information Security

2 Warsaw University of Technology

3 IDEAS NCBR

4 Tooploox

*Indicates Equal Contribution

The Growing Risk of Model Stealing

Machine learning models are increasingly exposed via public APIs, making them susceptible to model stealing. Attackers can systematically query these APIs, gathering enough data to reconstruct a nearly identical model at a fraction of the cost. This poses serious security risks, as stolen models can be used for unfair competition, academic plagiarism, or even malicious purposes.

Understanding Encoders: Why Are They Easy Targets?

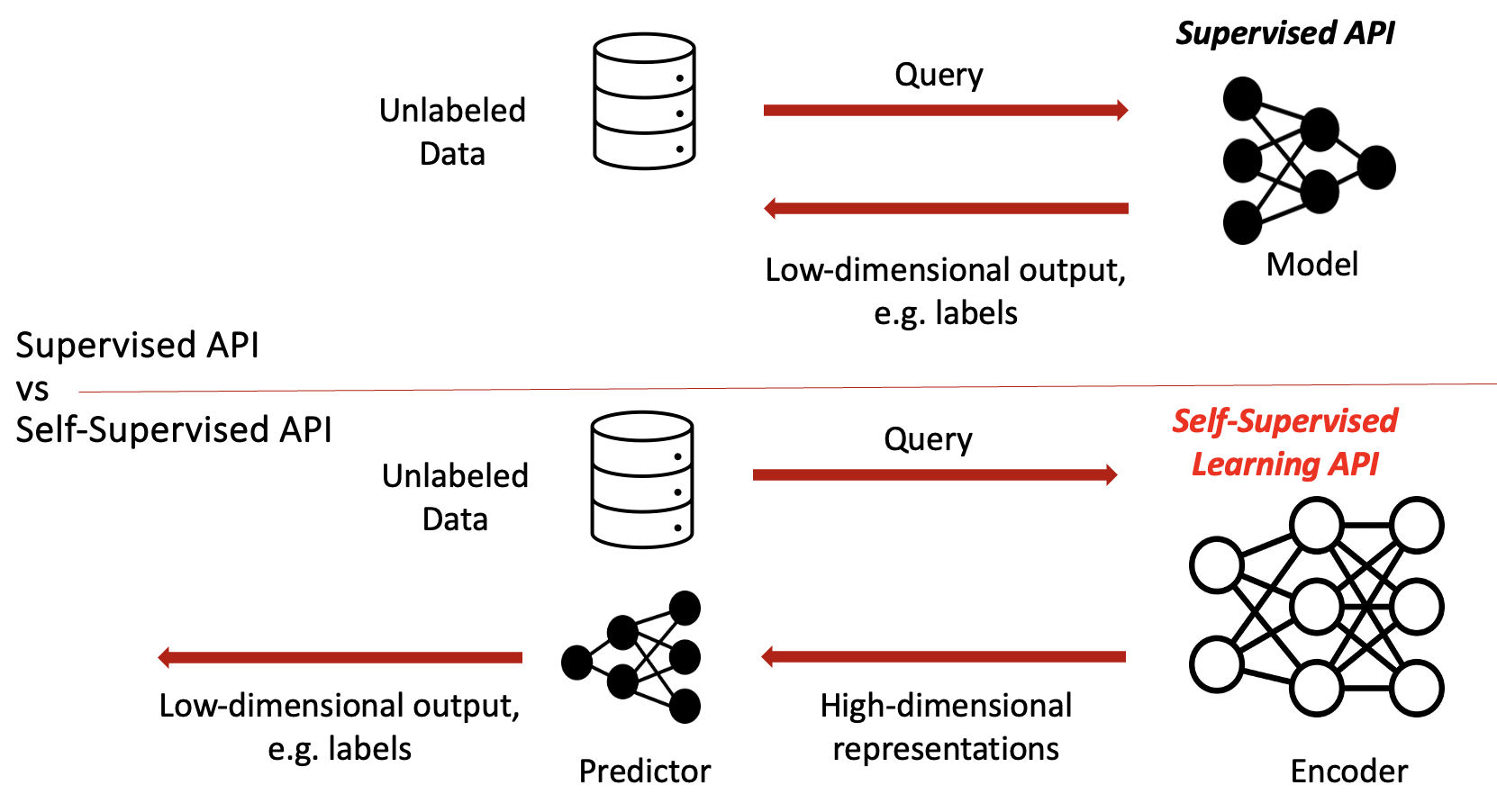

Encoders trained with Self-Supervised Learning (SSL) are a crucial component of modern AI. Unlike classification models that output simple labels, encoders generate high-dimensional feature representations that capture complex data patterns.

This flexibility also makes them highly vulnerable to theft:

- Their rich outputs leak more information than the labels returned by supervised models.

- They require expensive training but can be cheaply stolen with API queries.

- Attackers can efficiently replicate their functionality without needing the original training data.

How Model Stealing Works

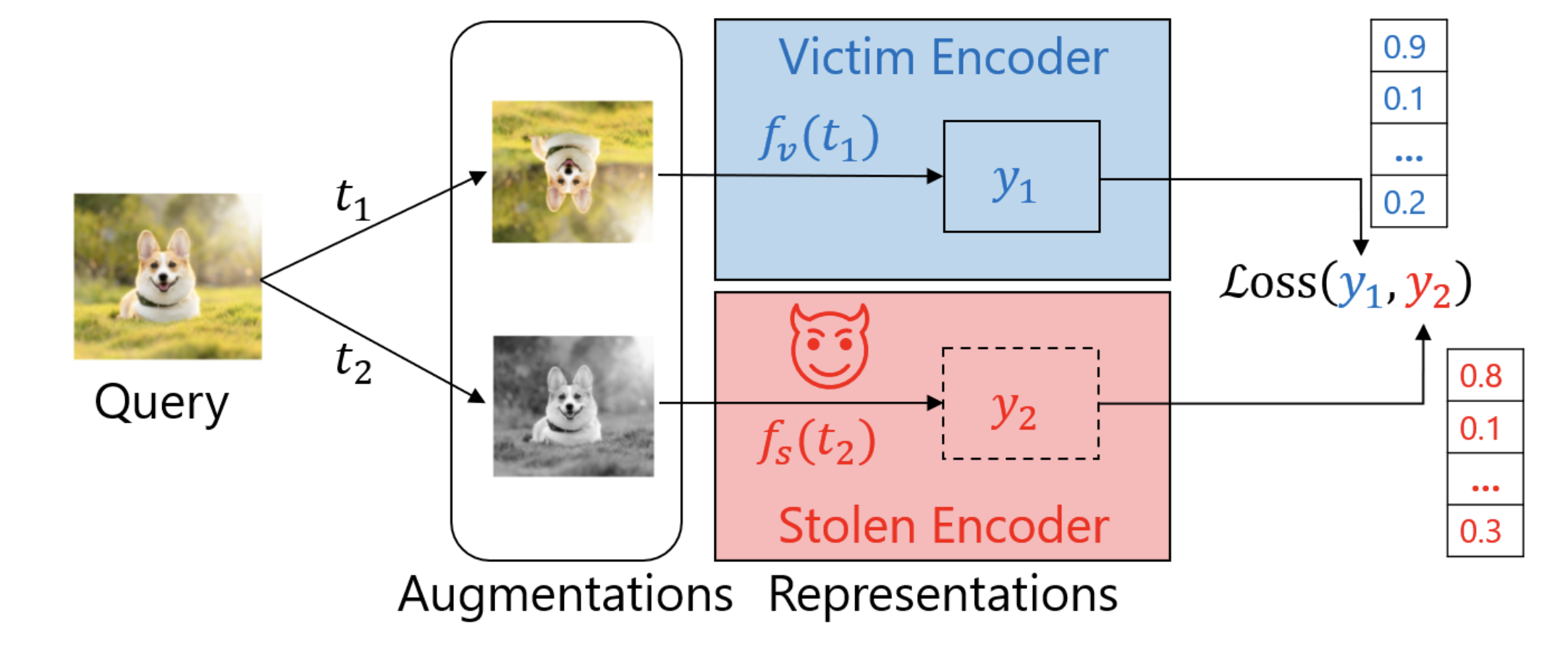

Model stealing attacks exploit the public accessibility of machine learning models by systematically querying them and using the returned outputs to train a substitute model. Attackers typically follow these steps:

- Querying the Model: The attacker sends numerous carefully crafted inputs to the target model via its API.

- Collecting Responses: Unlike classifiers that return class labels, encoders return rich feature representations, which reveal much more about the model's behavior.

- Training a Stolen Model: The attacker trains a new model on the collected representations, much as in knowledge distillation.

Because encoders output high-dimensional embeddings, they provide significantly more information per query than classifiers, making them even more vulnerable to extraction attacks.
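One common way to formalize the training step is shown below. This is a sketch under assumptions, not necessarily the objective used by any particular attack: the symbols $f$ (victim encoder), $g_\theta$ (substitute encoder), $x_i$ (attacker queries) and the squared-error distance are all illustrative choices, and other distances or contrastive losses could be used instead.

$$
\min_{\theta} \; \frac{1}{N} \sum_{i=1}^{N} \left\lVert g_\theta(x_i) - f(x_i) \right\rVert_2^2
$$

Each term compares a full embedding vector rather than a single label, which is why every query carries so much signal for the attacker.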

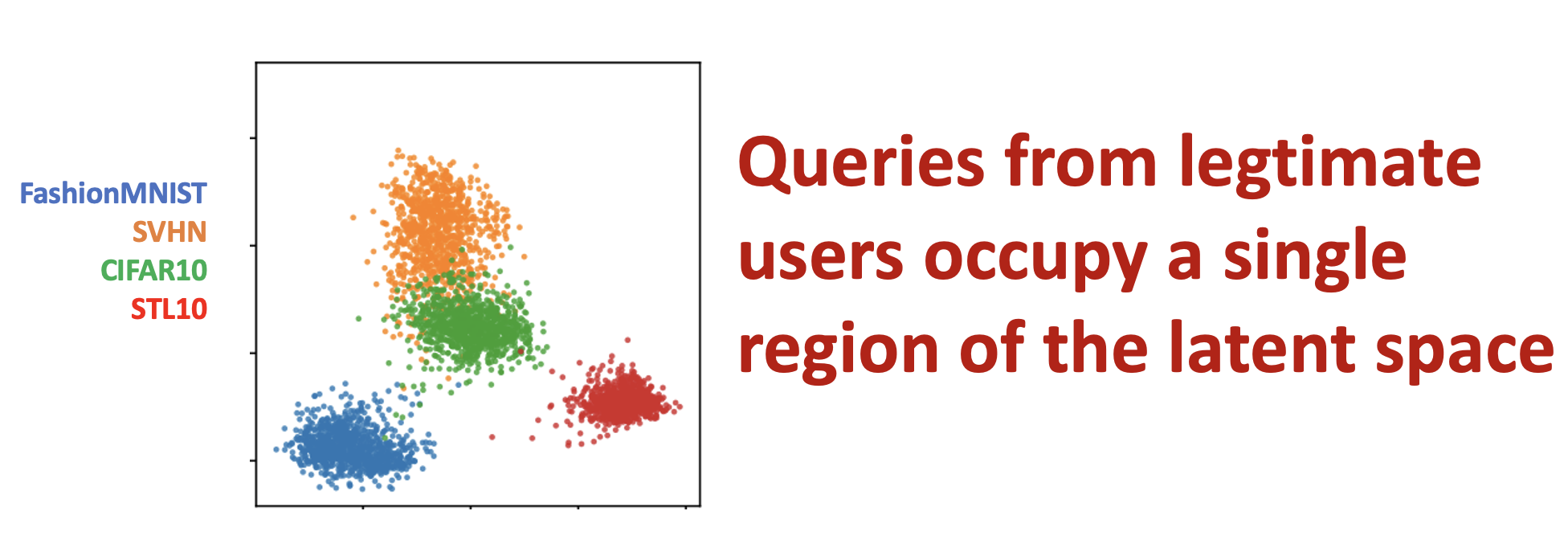

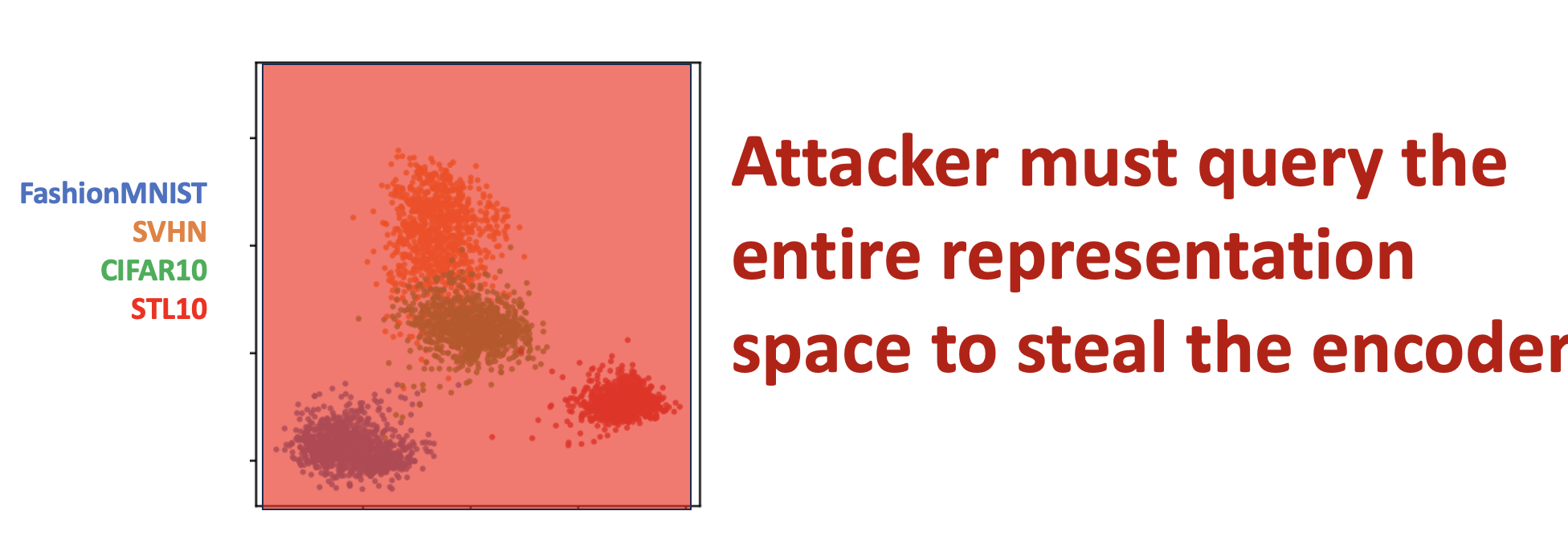

Key Observation: How Attackers Interact with Encoders

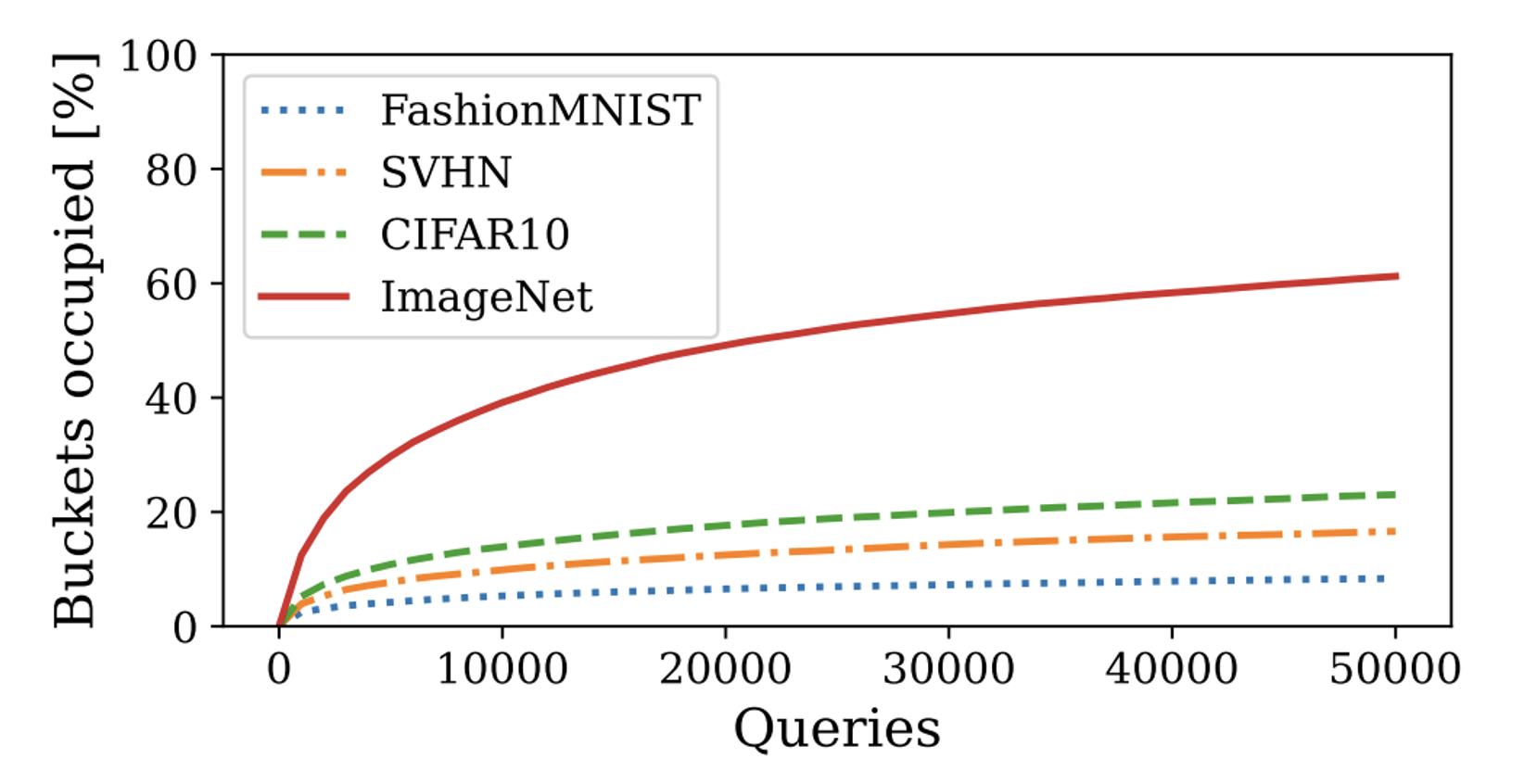

We start from a key observation: attackers and legitimate users interact with the encoder in fundamentally different ways. Legitimate users typically use the encoder to solve a particular downstream task, so their queries fall within a limited, task-specific region of the embedding space. In contrast, attackers trying to steal the model must explore much larger portions of the encoder’s latent space to reconstruct its full functionality.

This discrepancy enables an active defense mechanism: by tracking how much of the embedding space a user explores, we can distinguish between normal usage and adversarial behavior. An attacker's queries necessarily span a broader distribution and cover significantly larger portions of the embedding space than those of legitimate users, who remain within a specific subspace.

Measuring Latent Space Coverage to Prevent Theft

Building on our key observation, we introduce a method to quantify how much of the latent space a user explores. Instead of treating all queries equally, we divide the latent space into buckets and measure how many unique buckets a user’s queries occupy.

To achieve this, we leverage Locality-Sensitive Hashing (LSH), a technique designed to map high-dimensional embeddings into discrete hash buckets. LSH enables us to track how broadly a user’s queries are distributed across the latent space.
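The bucket-counting idea can be sketched in a few lines. The example below is a minimal illustration, assuming sign-random-projection LSH (one common LSH family; the text does not say which variant B4B uses). The class name `BucketTracker`, the 12-bit hash, and the synthetic "narrow" and "broad" embeddings are all hypothetical choices made for the demonstration.

```python
import numpy as np


class BucketTracker:
    """Tracks which LSH buckets one user's embeddings have occupied.

    Assumption: sign-random-projection LSH with n_bits hyperplanes,
    giving 2**n_bits possible buckets.
    """

    def __init__(self, dim, n_bits=12, seed=0):
        rng = np.random.default_rng(seed)
        # One random hyperplane per hash bit.
        self.planes = rng.standard_normal((n_bits, dim))
        self.n_buckets = 2 ** n_bits
        self.seen = set()

    def bucket_ids(self, embeddings):
        # Each bit records on which side of a hyperplane the embedding lies.
        bits = (embeddings @ self.planes.T) > 0
        weights = 1 << np.arange(bits.shape[1])
        # Pack the bits of each row into a single integer bucket id.
        return bits.astype(np.int64) @ weights

    def update(self, embeddings):
        # Record newly occupied buckets and return the updated coverage.
        self.seen.update(int(b) for b in self.bucket_ids(embeddings))
        return self.coverage()

    def coverage(self):
        # Fraction of all buckets this user has touched so far.
        return len(self.seen) / self.n_buckets


# Synthetic stand-ins for encoder outputs (not real model embeddings).
rng = np.random.default_rng(1)
dim = 32
center = rng.standard_normal(dim)
narrow_embeddings = center + 0.05 * rng.standard_normal((2000, dim))  # task-focused user
broad_embeddings = rng.standard_normal((2000, dim))                   # wide exploration

narrow_cov = BucketTracker(dim).update(narrow_embeddings)
broad_cov = BucketTracker(dim).update(broad_embeddings)
print(f"narrow coverage: {narrow_cov:.4f}, broad coverage: {broad_cov:.4f}")
```

Embeddings clustered around one point land in only a handful of buckets, while widely spread embeddings occupy many more, so the coverage fraction separates the two usage patterns.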

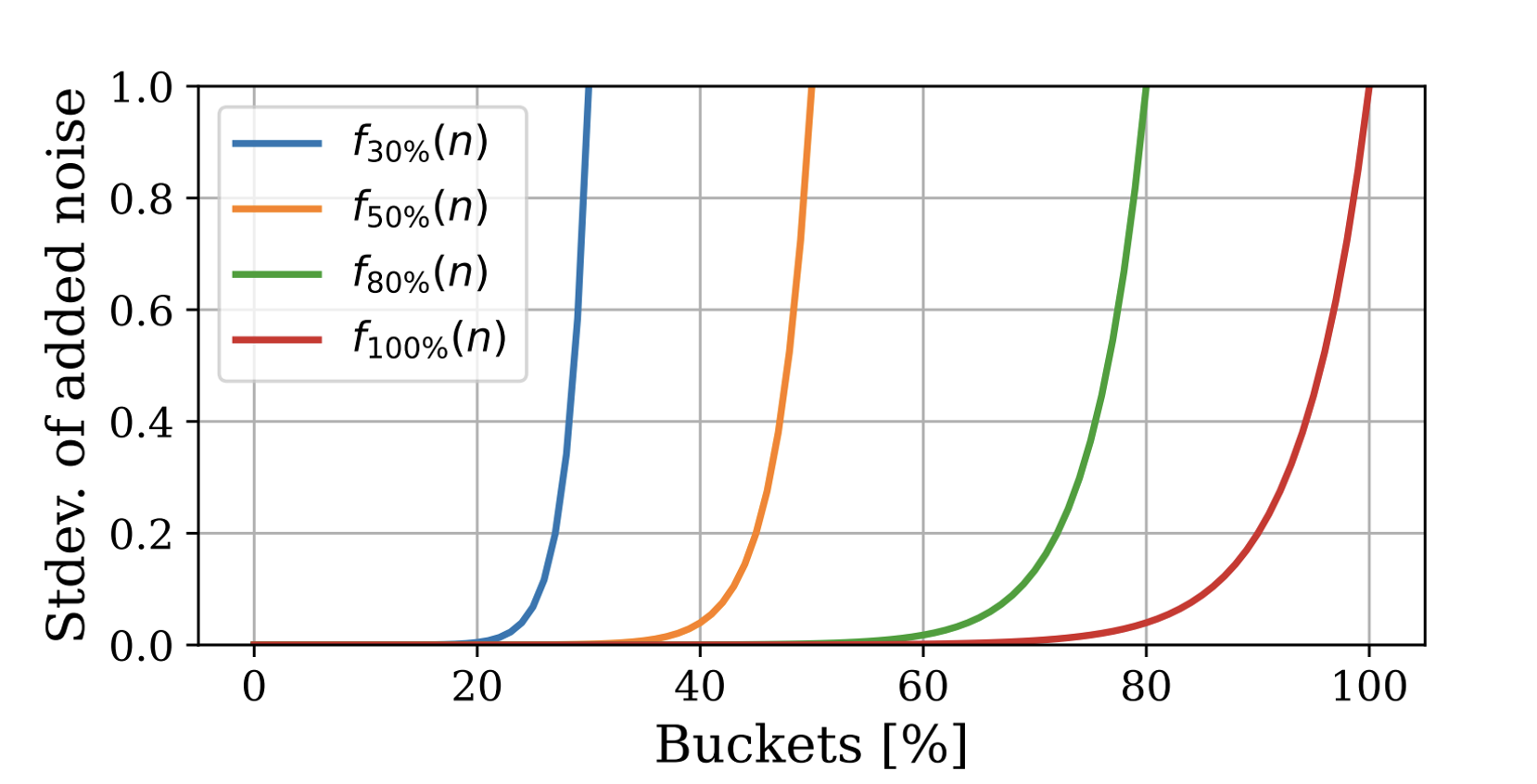

We associate high bucket coverage of the latent space with an extremely high query cost, and low coverage with a negligible cost.
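A cost function with this shape can be sketched as follows. The exponential form and the parameters `base_cost` and `max_cost` are assumptions chosen for illustration; the text only requires that cost be negligible at low coverage and extremely high at high coverage.

```python
import math


def query_cost(coverage, base_cost=1e-3, max_cost=1e3):
    """Map bucket coverage in [0, 1] to a cost.

    Hypothetical exponential interpolation: returns base_cost at
    coverage 0 and max_cost at coverage 1, growing smoothly in between.
    """
    if not 0.0 <= coverage <= 1.0:
        raise ValueError("coverage must lie in [0, 1]")
    return base_cost * math.exp(math.log(max_cost / base_cost) * coverage)


for c in (0.0, 0.05, 0.5, 1.0):
    print(f"coverage={c:.2f} -> cost={query_cost(c):.4g}")
```

An exponential curve stays nearly flat for the small coverage values typical of a task-focused user and rises steeply only once a user has occupied a large share of the buckets.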

B4B: A Way to Prevent Model Theft

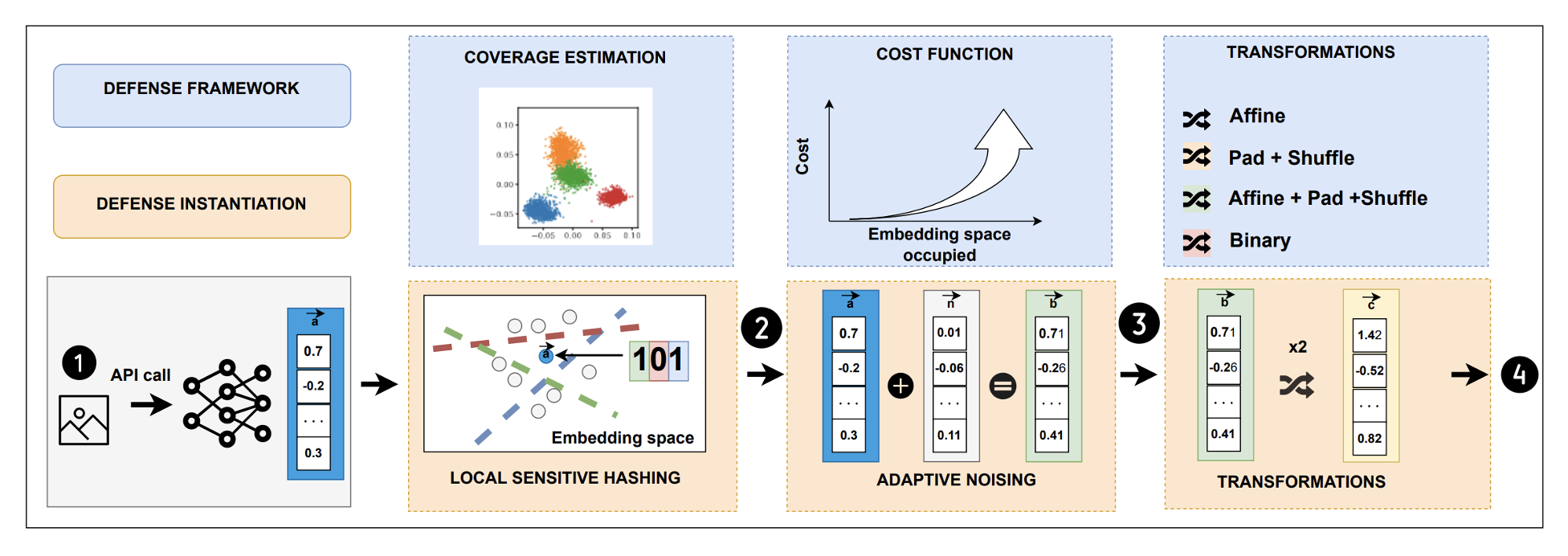

Now we can describe Bucks for Buckets (B4B) end to end! B4B is designed to stop attackers as they attempt to steal encoders, ensuring that regular users receive high-quality outputs while making theft prohibitively expensive. It achieves this through three key mechanisms:

- Tracking Query Behavior - B4B estimates how much of the encoder’s embedding space a user is exploring using Locality-Sensitive Hashing (LSH). Legitimate users, who focus on specific tasks, naturally stay within smaller regions, whereas adversaries trying to steal the model attempt to cover much larger areas.

- Dynamic Penalties - Based on the user’s query behavior, B4B gradually introduces noise to the encoder’s outputs, making it harder for attackers to reconstruct the stolen model. This penalty escalates as the adversary’s exploration of the embedding space increases.

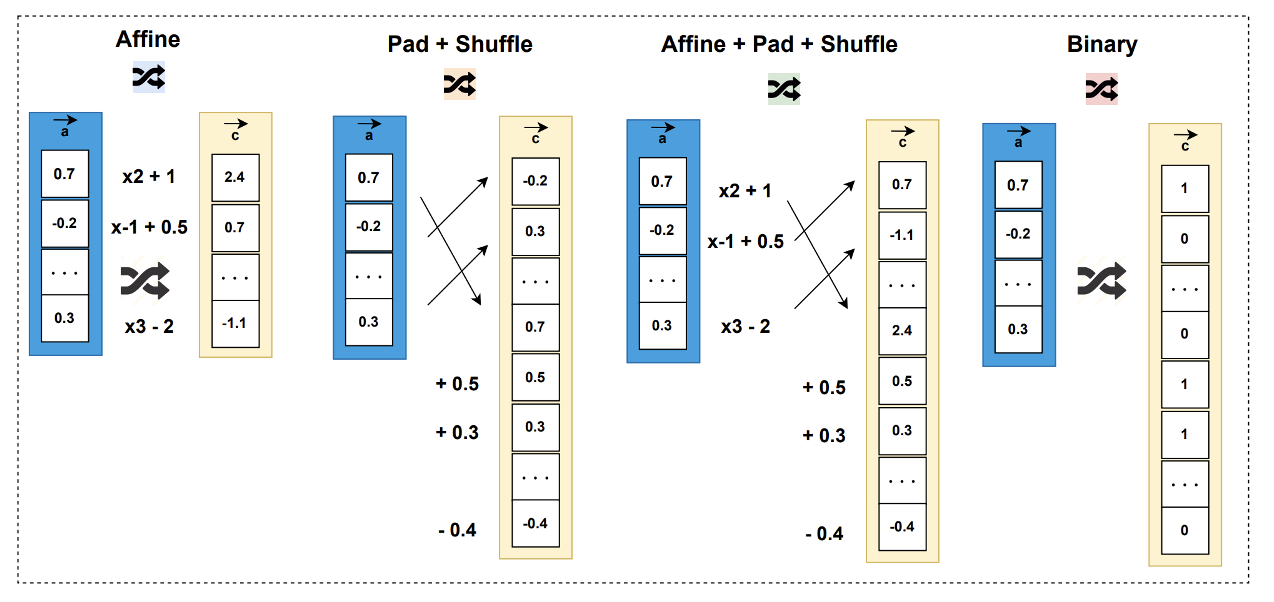

- Personalized Responses - Additionally, to counter Sybil attacks, where an adversary creates multiple accounts to evade detection, B4B applies unique transformations to each user’s representations. This prevents attackers from merging extracted responses across accounts to reconstruct the encoder.
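The last two mechanisms can be combined in a short sketch. Everything here is an assumption made for illustration: the linear noise schedule, the parameter `max_sigma`, the function names `user_rotation` and `respond`, the placeholder `secret`, and the use of a per-user random orthogonal matrix as the "unique transformation" are one plausible choice and not necessarily what B4B implements.

```python
import hashlib

import numpy as np


def user_rotation(user_id, dim, secret="server-secret"):
    """Derive a deterministic random orthogonal matrix for one user.

    Hypothetical choice of per-user transformation; `secret` is a
    placeholder for a value known only to the API provider.
    """
    digest = hashlib.sha256(f"{secret}:{user_id}".encode()).digest()
    rng = np.random.default_rng(int.from_bytes(digest[:8], "big"))
    q, r = np.linalg.qr(rng.standard_normal((dim, dim)))
    # Fix column signs so the factorization is uniquely determined.
    return q * np.sign(np.diag(r))


def respond(embeddings, user_id, coverage, max_sigma=1.0, rng=None):
    """Return embeddings with coverage-scaled noise and a per-user rotation."""
    if rng is None:
        rng = np.random.default_rng()
    # Assumed linear schedule: no noise at coverage 0, max_sigma at coverage 1.
    sigma = max_sigma * coverage
    noisy = embeddings + sigma * rng.standard_normal(embeddings.shape)
    return noisy @ user_rotation(user_id, embeddings.shape[1])


# Synthetic stand-in for clean encoder outputs.
clean = np.random.default_rng(0).standard_normal((4, 8))
alice = respond(clean, "alice", coverage=0.0)
bob = respond(clean, "bob", coverage=0.0)
print(np.allclose(alice, bob))  # different users receive different vectors
```

An orthogonal transformation preserves distances and inner products, so a downstream model trained on one account's outputs is largely unaffected, while vectors collected under different accounts live in different coordinate systems and cannot simply be pooled.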

B4B in Action: Stopping Model Theft Without Compromising Usability

We tested B4B on popular SSL models, including SimSiam and DINO, against cutting-edge stealing techniques. Our findings demonstrate that B4B:

- Reduces stolen model performance by up to 60%, while ensuring legitimate users experience less than a 1% drop in utility.

- Effectively neutralizes Sybil attacks, making it infeasible to aggregate stolen data from multiple accounts.

- Outperforms previous security methods, which either failed to prevent theft or significantly degraded output quality for all users.

Enabling More Secure AI APIs

As AI companies increasingly offer pre-trained SSL models through public APIs, solutions like B4B will be essential in protecting intellectual property. Our modular framework allows for adaptation to various security policies and evolving threats.

For further details, check out our full research paper presented at NeurIPS 2023.

Bibtex

@inproceedings{dubinski2023bucks,

title = {Bucks for Buckets (B4B): Active Defenses Against Stealing Encoders},

author = {Dubiński, Jan and Pawlak, Stanisław and Boenisch, Franziska and Trzcinski, Tomasz and Dziedzic, Adam},

booktitle = {Thirty-seventh Conference on Neural Information Processing Systems (NeurIPS)},

year = {2023}

}